Automated Social Media Moderation In Focus Following Allegations Of Censorship

Panelists say they’ve been censored on social media — and they point to platforms’ auto moderation.

June 2, 2021 – Social media platforms that have automated moderation policies have been wittingly or unwittingly censoring legitimate speech, according to activists, with those corporate tools coming into focus following last month’s violence in the Middle East.

Platforms like Facebook and its subsidiary Instagram, as well as others, have moderation systems that automatically flag and remove posts that may encourage hate speech or violence.

But those systems have been taking down, blocking and censoring content from Palestinians, a pattern that became evident as violence erupted between Israelis and Palestinians last month and continues today, according to a panel hosted by the Middle East Institute Wednesday.

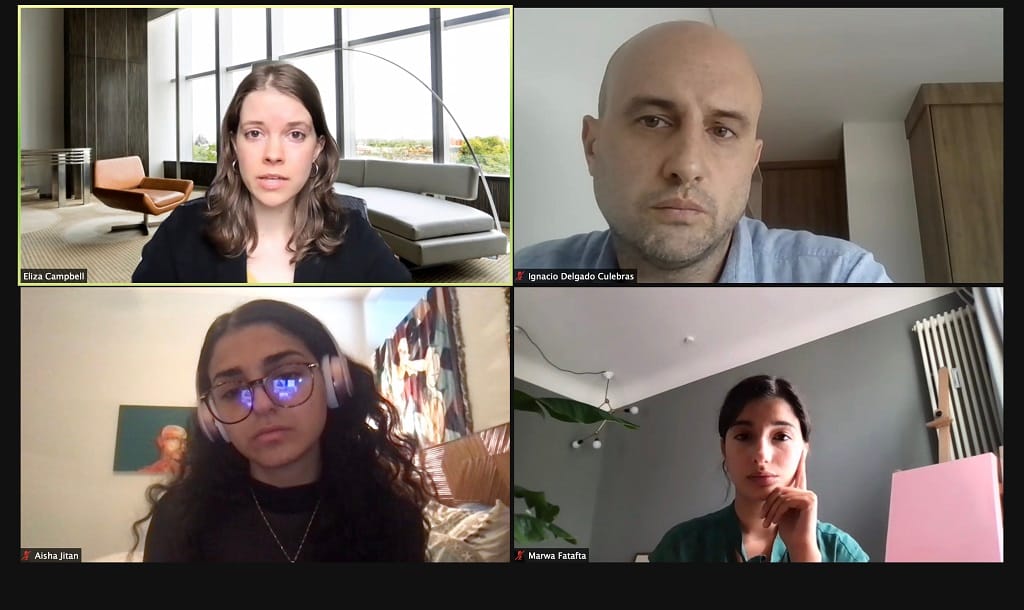

Words have been misinterpreted as terrorist speech, flagged and removed, the panelists said. Middle East policy analyst Marwa Fatafta cited the erroneous association of Islam’s third-holiest mosque, Al-Aqsa in Jerusalem, with a terrorist organization. This error led to blocked hashtags, removed users, and deleted posts, and Facebook’s response was that it was just a “technical glitch.”

“Their machines are blind to the vital context,” Fatafta said. “This is not unique to the Palestinians. This is bad news to all aspects of social justice.”

Palestinians have said that their perspective has not been reflected adequately in traditional media, and they have taken to social media as a way to get their message across.

The discussion comes as conversations heat up about possible reforms to Section 230, the legal provision governing platform liability for what users post.

At a time of such violence, Fatafta said, this is a profound human rights problem that these large companies need to address immediately. She said the danger of giving this power to big tech companies is that they can choose the narrative they want the world to hear and censor what they deem unacceptable.

Ignacio Delgado Culebras, a journalist covering the Middle East and North Africa, said social media platforms need to be more transparent. He said the public is still in the dark about how companies make these decisions and whom they consult, and that thousands of requests over the years to adjust the community standards have been denied.

“These are ultimately human policy decisions, and they can be addressed or reversed,” said Eliza Campbell, an associate director at the Middle East Institute. “These are systems that we chose, and we can choose to reconsider them, and hopefully, that will be something we can see going forward.”