Labeling and Rating Potentially Harmful AI Systems Is Inherently Complex, Say Brookings Panelists


October 5, 2020 — Germany has attempted to raise awareness of the consumer harm that artificial intelligence systems can cause by developing categories for the degree of environmental, economic, and societal impact such systems could have.

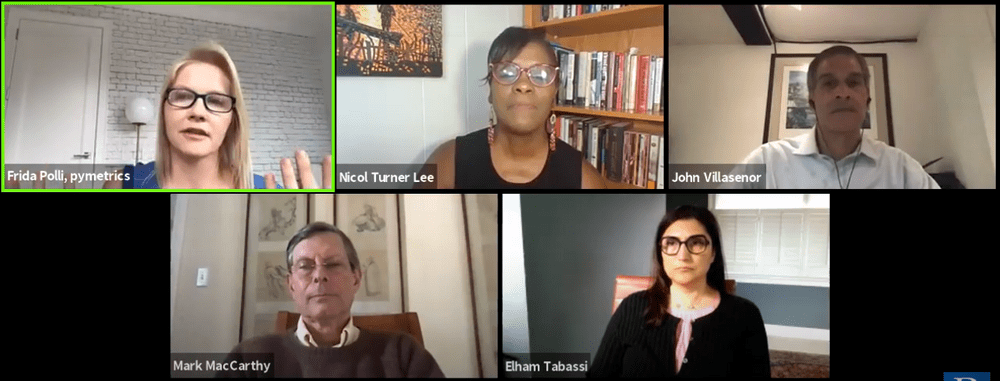

To hear expert opinions on whether policymakers and stakeholders in the United States should adopt similar models and use AI certifications, ratings, and labeling, the Brookings Institution hosted a panel on Thursday to consider potential “blind spots.”

“We need some sort of testing or auditing mechanism,” said Elham Tabassi, chief of staff of the Information Technology Laboratory at the National Institute of Standards and Technology.

“Standards for AI are lacking right now,” Tabassi said, and experts “don’t know how to test AI systems for bias.”

Mark MacCarthy, an adjunct faculty member in communication, culture, and technology at Georgetown University, described what has typically happened when industry adopted ethics systems in an attempt to manage new forms of media or technology.

The Platform for Privacy Preferences Project, or P3P, was “a system developed when the public was worried about safety online, which allowed users to opt out of online tracking,” said MacCarthy.

Screenshot from the Brookings Institution webinar

The idea was that websites would post their privacy policies in P3P format and web browsers would download them automatically and compare them with each user’s privacy settings.

In the event that a privacy policy did not match the user’s settings, the browser could alert the user, block cookies, or take other actions automatically. Yet, the system “did not come to anything.”
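The matching step described above can be sketched in a few lines of Python. This is a simplified illustration only, not the actual P3P vocabulary or any browser's implementation: the `SitePolicy` and `UserSettings` types, the practice names, and the `check_policy` function are all hypothetical.

```python
# Hypothetical, simplified model of P3P-style matching; not the real P3P schema.
from dataclasses import dataclass


@dataclass(frozen=True)
class SitePolicy:
    # Data practices a site declares in its machine-readable policy
    # (the practice names used here are illustrative).
    practices: frozenset


@dataclass(frozen=True)
class UserSettings:
    # Practices the user has chosen not to accept.
    disallowed: frozenset
    # What the browser does on a mismatch: "alert" or "block_cookies".
    on_mismatch: str = "alert"


def check_policy(policy, settings):
    """Return the browser action and the set of conflicting practices."""
    conflicts = policy.practices & settings.disallowed
    if not conflicts:
        return "allow", set()
    return settings.on_mismatch, set(conflicts)


if __name__ == "__main__":
    policy = SitePolicy(frozenset({"tracking", "analytics"}))
    settings = UserSettings(frozenset({"tracking"}), on_mismatch="block_cookies")
    action, conflicts = check_policy(policy, settings)
    print(action, sorted(conflicts))  # block_cookies ['tracking']
```

The comparison is a set intersection: any declared practice that the user has disallowed triggers the user's chosen response, and an empty intersection lets the page load normally.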

“A vast number of systems have been proposed for ethics of artificial intelligence,” said MacCarthy. “The rating system is not well developed, but I hope this conversation can help it,” he said.

MacCarthy maintained that he held two reservations. First, he noted that most non-mandatory ethics regulations have failed because of a lack of industry buy-in. It “would need to be mandatory,” as “industry has no reason to use it,” said MacCarthy.

Second, “even with coercion the objective of an AI rating system is to give consumers more information about these systems, and to be honest, that may not be enough,” he concluded.

“There are so many complications in the AI process, it makes it extremely difficult” to pinpoint where systems go wrong, said John Villasenor, nonresident senior fellow in governance studies and the Center for Technology Innovation at Brookings.

One issue, according to Villasenor, is that AI systems are “produced by commercial entities, to make a profit.”

“Many companies don’t put their source code on the internet because they want to remain competitive,” he said. Further, with over “500,000 lines of code, it may not be easy to figure out exactly what the algorithm is doing.”

The panelists did not rally around the German approach of identifying high-risk algorithms and labeling them.

“How to decide if something is high risk?” asked Tabassi. She also wondered whether labeling and categorizing AI would create a false sense of security.