Public Knowledge’s Internet Superfund is a Vaccine Against Toxic Misinformation and Conspiracy Theories Run Rampant

June 4, 2020 — In a video that quickly went viral, discredited medical researcher Judy Mikovits claimed that the novel coronavirus was intentionally unleashed upon the world by Bill Gates’ elite cabal and that wearing masks can worsen COVID-19 symptoms, among other conspiracies.

Facebook, YouTube and other platforms quickly scrambled to scrub “Plandemic,” which had already generated millions of views, from their platforms. But in doing so, they jiggled a hive of conspiracy theorists who have since redoubled their efforts in accelerating the spread of misinformation.

The rise and rapid spread of completely false and yet weirdly compelling news documentaries presented as truth is an example of how toxic the internet seems to have become. It’s a perfect example of what inspired the Washington-based advocacy group Public Knowledge to propose an internet “Superfund” that would “clean up” other people’s toxic messes — just as the original Superfund did in the 1980s.

‘Plandemic’ shows ‘how complex misinformation has become’

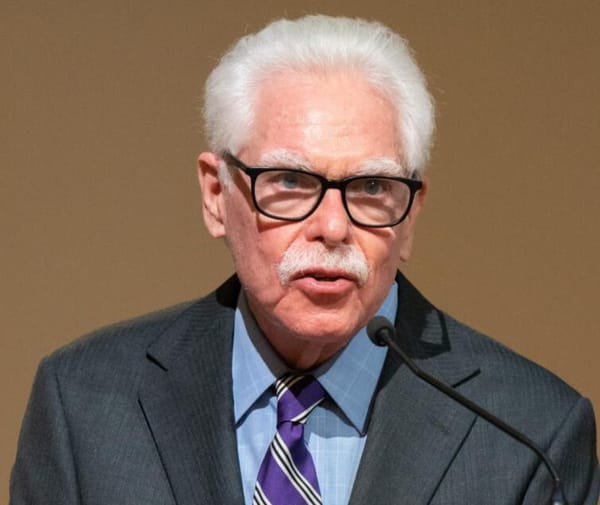

“‘Plandemic,’ I think, is a wonderful model of how complex misinformation has become,” said Lisa Macpherson, a senior fellow at Public Knowledge and lead researcher on the organization’s Superfund. Public Knowledge is a 19 year old organization that promotes freedom of expression, an open internet and access to affordable communications tools and creative works.

Photo of Public Knowledge Senior Fellow Lisa Macpherson courtesy @lisahmacpherson on Twitter

The video is “very professionally produced,” Macpherson told me in a phone interview. “It looked for all the world like a credible, professionally produced piece of content. It doesn’t have seedy production value.”

While I haven’t officially seen “Plandemic” – I didn’t want to lend credit or contribute to the spread of spurious information – I did watch a video by “Doctor Mike,” a real doctor who translates the knowledge of the medical community into accessible YouTube clips, including an analysis fact-checking the video, lie-by-lie.

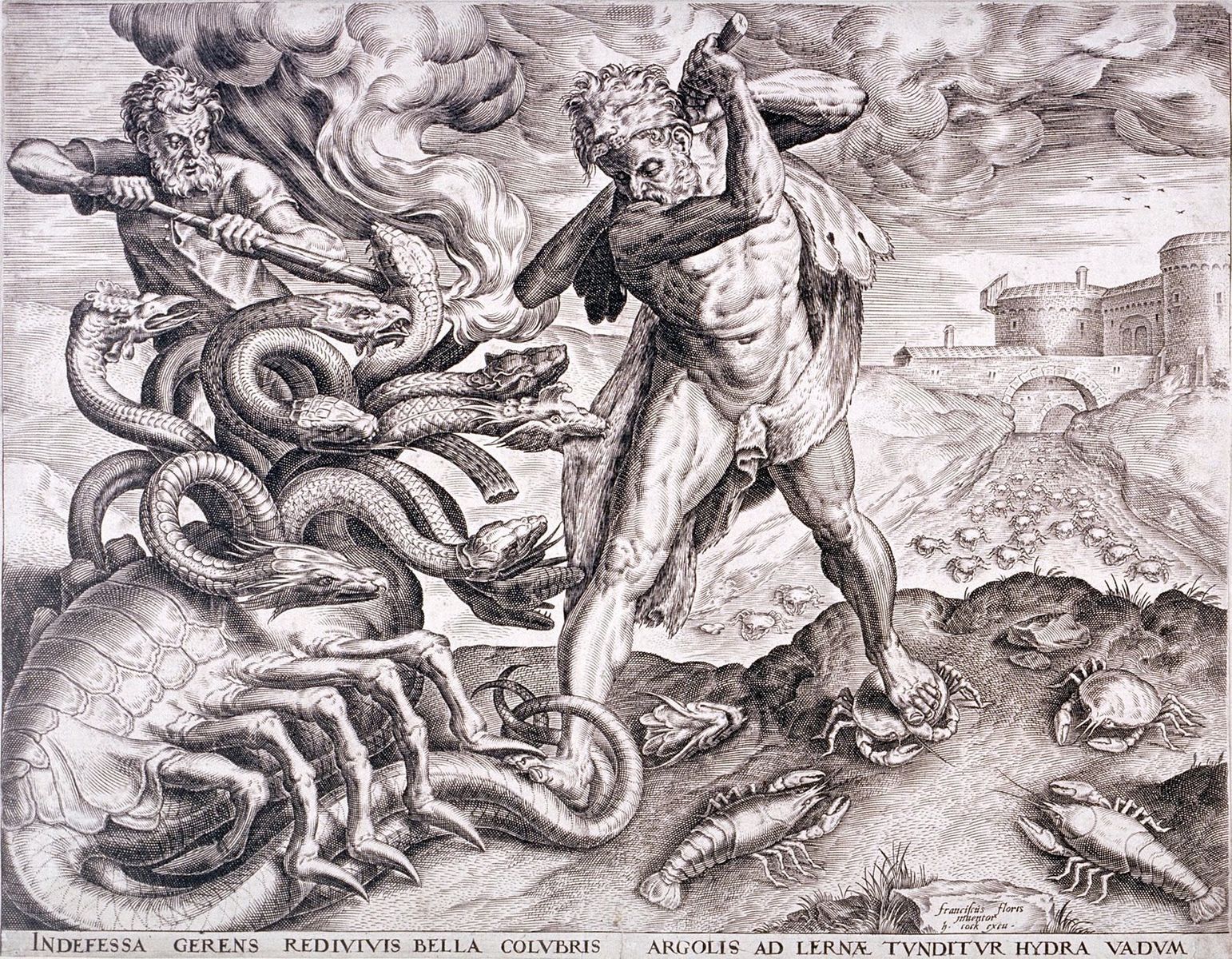

The seemingly straightforward action of striking down this video is made complicated by the hydra-like nature of conspiracy content on the internet: “If I see the phrase ‘Whac-A-Mole’ one more time…” Macpherson jokingly grumbled.

Screenshot of video “Doctor Fact-Checks PLANDEMIC Conspiracy”

Banned on big tech platforms, “Plandemic” resurfaces through conspiratorial relinks

“Plandemic” has resurfaced through links that redirect to a Google Drive file containing the video, through videos that have been re-edited to fool Facebook and YouTube AI content moderation or through full-length videos appearing on obscure websites.

It’s as if the hydra is sprouting new heads that host a third eye, a shorter neck or a new face.

Not only is the beast harder to kill, but it emboldens other creatures to spring forth from the abyss. “Plandemic” and similar misinformation stunts have “activated very strong and active anti-vax communities” who are often motivated to act in anticipation of a vaccine, Macpherson said.

Engraving of Hercules battling the hydra

If dangerous falsehoods about the coronavirus were accepted by even a small portion of the population, they could cause pockets of outbreaks in the future.

It’s “quite literally life or death,” Macpherson said. In the long run, she added, it will likely cause “continued undermining in the belief of our authorities, the government, journalism [and] trust and belief in each other as citizens.”

An Internet Superfund to clean up other people’s toxic messes

“As the platforms have gained power and influence and a role in people’s lives, like many industries before them, [the big tech platforms] need to take accountability,” Macpherson said. That need for accountability, she said, motivates her research into a proposed Internet Superfund that would compel platforms to pay local journalists and fact-checking organizations to perform an information-detox service.

Public Knowledge’s Superfund is designed to call to mind the environmental Superfund program, which Congress created in 1980 and the Environmental Protection Agency administers. That Superfund identifies parties responsible for hazardous substances released into the environment and either compels the polluters to clean up the sites or bills them for a clean-up service provided by another organization.

The proposal is accompanied by another Public Knowledge effort: its misinformation tracker. The tracker provides a roundup of the latest chatter in the news regarding misinformation, listing “primary case studies” such as Google and Facebook and “secondary case studies” such as Pinterest and TikTok.

“It’s a novel idea,” Public Knowledge CEO Chris Lewis said about the Superfund, comparing today’s online misinformation to environmental pollution.

Platforms have an incentive to promote content that keeps users on their sites, which means they often highlight “content that draws [users] for a reaction … That content isn’t always factual, and it can be hateful,” Lewis said.

In light of this behavior, Lewis said it was fair for tech platforms to be treated like companies compelled to act by the environmental Superfund.

Public Knowledge is still waiting for the opposition it expects to the ‘Internet Superfund’

It’s too early to say how an Internet Superfund will be received by big tech.

“I think we’re still waiting to see who disagrees with the idea,” Lewis said, since the proposed Superfund was only recently announced.

But one can take guesses. The Wall Street Journal recently reported that Facebook executives shelved internal research suggesting that aspects of their platform exacerbate polarization and sow misinformation and conspiracies.

Mark Zuckerberg, the CEO of the biggest tech platform on the planet, will probably have something to say about an Internet Superfund that interferes with his business model.

Lewis has identified some potential helpers in the halls of Congress. “Certainly, it starts with members of the Senate Commerce Committee and House Energy and Commerce Committee,” he said.

Sen. Roger Wicker, R-Miss., and Rep. Anna Eshoo, D-Calif., might be likely to support such a proposal, Lewis added.

He also expressed hope that Sen. Maria Cantwell, D-Wash., would take an active interest in any proposed legislation after she signaled approval at a Senate Commerce hearing in May, saying that she was “intrigued” by Public Knowledge’s proposal.

Screenshot of Sen. Maria Cantwell from a Senate Commerce hearing in May 2020

Dealing with bad information is only half the problem: How to support good information?

Just as important as the compulsion part of the proposed Superfund is the development of a new revenue stream to support local journalism.

Policy backing is necessary to ensure the model works, Lewis told me, because he “certainly [doesn’t] want the opportunity to support local journalism … to be subjected to the charitable capabilities of a technology company.”

Facebook announced a donation of $1 million to local journalism organizations in March in response to the crisis, and other tech companies have followed suit. The generous actions of tech companies have been criticized by some for appearing to be temporary measures to help ameliorate the so-called Techlash.

However, Lewis also balked at suggesting a fully compulsory approach. “We’re looking for a market-based solution,” he assured me.

The spread of misinformation is sometimes called a second virus, materializing in Facebook’s Newsfeed and YouTube’s “Recommended for you” playlist to target the malicious and unwitting.

“It’s not an easy problem,” admitted Macpherson.