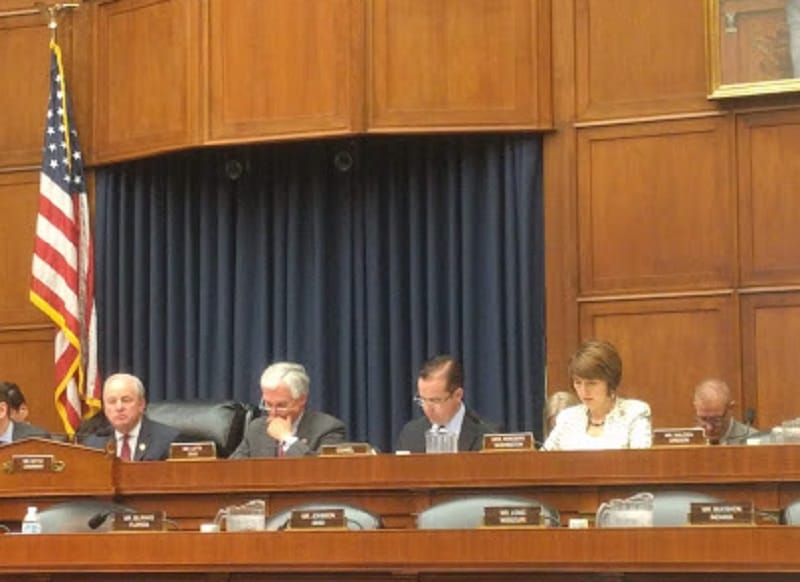

Internet Industry Under the Microscope as House Committee Grills Witnesses on Liability for Online Content

WASHINGTON, October 16, 2019 – The chairman of the House Energy and Commerce Committee on Wednesday said that technology companies need to “step up” and better address challenges surrounding online content. If they do not, they will likely have to navigate a world in which Section 230 of the Communications Decency Act is modified.

The internet is far more sophisticated than it was when Section 230 was enacted as part of the Telecommunications Act of 1996, said Chairman Frank Pallone, D-N.J.

But Ranking Member Greg Walden, R-Ore., countered that the internet is not something the government can regulate and manage. Any discussion of Section 230 reform, he said, must distinguish between illegal content and constitutionally protected speech.

The witnesses at the hearing generally agreed that Section 230 should stay, though several said the law has problems that should be addressed.

Reddit Co-Founder and CEO Steve Huffman said that even slightly narrowing the protections of the CDA could undermine the freedom of the internet. At Reddit, for example, individual users play a crucial role in moderating content themselves. Those interactions, he said, helped curb Russian meddling in the 2016 election via social media.

Section 230 needs to return to its original purpose, said Danielle Keats Citron, professor of law at Boston University School of Law. When the provision was first introduced, she said, its goal was to encourage online platforms to take the lead on content moderation.

Today, Citron added, Section 230 acts as a legal shield covering the conduct of these platforms, including websites that may engage in illegal activity. This problem, she said, requires legal reform and cannot be solved by the market alone.

The CDA has helped ordinary people by removing much of the gatekeeping around social change, said Corynne McSherry, legal director at the Electronic Frontier Foundation. Increasing company liability, she said, could lead to over-censorship and stifle competition, since smaller firms would be burdened by regulation.

In contrast, Gretchen Peters, executive director of the Alliance to Counter Crime Online, said that tech companies need to face greater liability in order to reduce online safety risks. Terrorist organizations and other bad actors, she said, exploit social media algorithms to further their agendas.

Section 230 is more about liability than freedom of speech, she said. Because of its safe harbors and broad interpretation of the law, tech firms have failed to uphold their end of the bargain to protect people from dangerous online content.

Hany Farid, professor at the University of California, Berkeley, advised the Committee not to view artificial intelligence as the “savior” of content moderation. The billions of pieces of content created every day, he said, are too much for automation alone to handle. Human review is necessary to uphold a decent standard of online communication.

Google’s Global Head of Intellectual Property Policy Katherine Oyama said that her company’s ability to take action on questionable content rests on the protections of Section 230.

The CDA helps distinguish the US approach to the internet from that of countries such as China and Russia, she said. Furthermore, weakening online safe harbors could have a recession-like impact on investment and expose companies to far more consumer litigation.

Without Section 230, Oyama added, online platforms would either not be able to filter content at all or over-filter content that needs to be heard, hurting both consumers and businesses.
