Big Tech and FTC Under Attack at Senate Hearing

Em McPhie

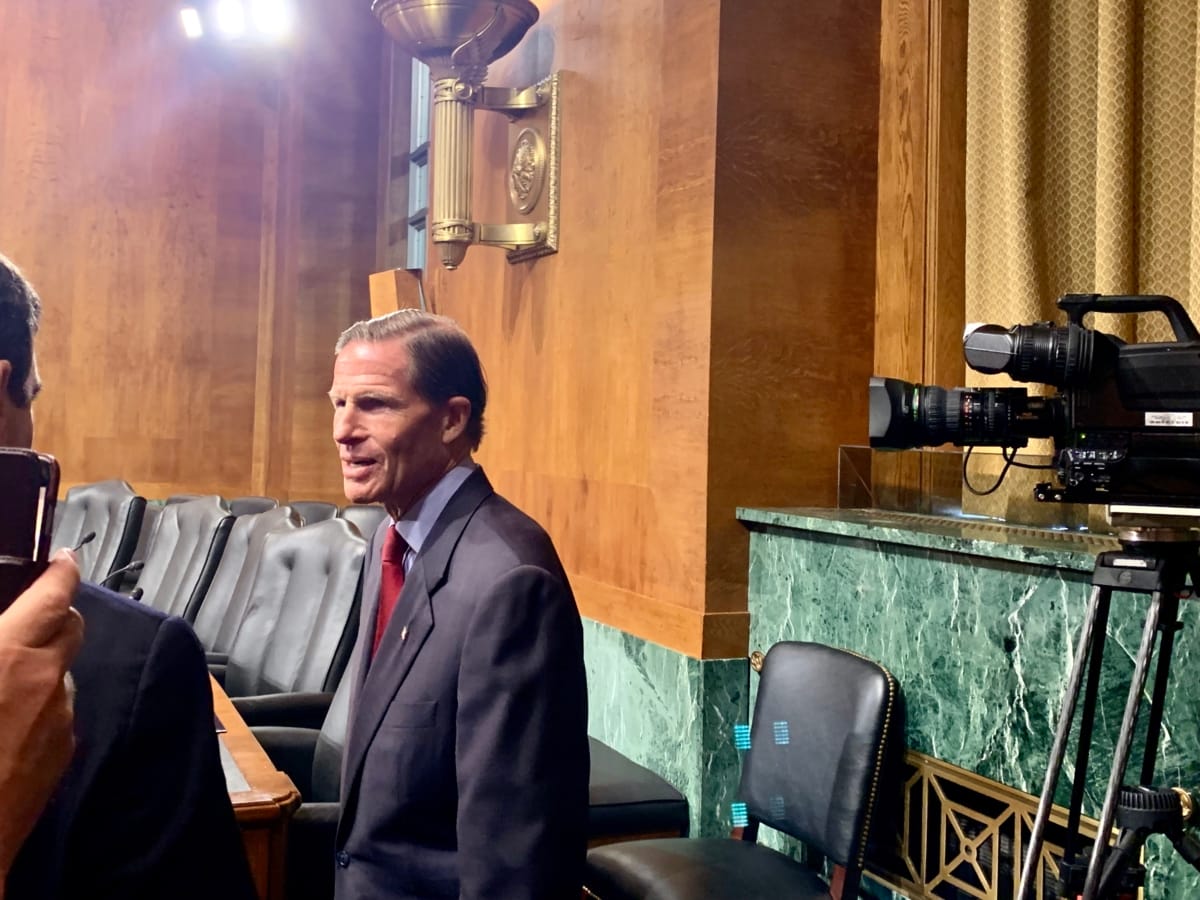

WASHINGTON, July 9, 2019 — YouTube has been “inadequate and abysmally slow in responding” to recent allegations that its algorithms promote child sexualization, said Sen. Richard Blumenthal, D-Conn., at a Senate Judiciary Committee hearing on Tuesday.

If big tech companies don’t take sufficient steps to protect children from exploitation on their platforms, they should be stripped of their protections under Section 230 of the Communications Decency Act, allowing parents and others to sue them, said Sen. Lindsey Graham, R-S.C.

Using Section 230 protections as leverage is the “most effective” way of creating prompt change, Graham claimed. Blumenthal agreed, arguing that the uniquely broad immunity given to big tech platforms is part of the reason that they are failing to do more to protect children.

The root of the problem is that companies are prioritizing ad revenue over child safety, said Sen. Josh Hawley, R-Mo. YouTube has the ability to stop automatically recommending videos featuring children but chooses not to because three-quarters of the platform’s traffic comes from the autoplay feature, Hawley said.

The Federal Trade Commission also came under fire at the hearing for failing to enforce the Children’s Online Privacy Protection Act. The FTC can and should protect children by using its authority under Section 5 of the Federal Trade Commission Act to hold platforms responsible both for the services they market to children and for the services that are marketed to adults but used by children anyway, said Georgetown Law Professor Angela Campbell.

Platforms such as YouTube fall into the latter category; although Google’s Terms of Service require users to be at least 13 years old, the company clearly knows that younger children are using the platform, but has failed to address this concern, according to Campbell.

Even with increased enforcement, COPPA regulations are inadequate for protecting children because of rapid technological developments and because the safeguards do not apply to children aged 13 and older.

In response to questioning from Sen. Blumenthal, all witnesses at the hearing supported updating COPPA regulations to be modeled after the California Consumer Privacy Act, which expands protections to minors up to the age of 16.

The internet is a place of both “wonderful potential and troubling and pervasive darkness,” said Christopher McKenna, founder of internet safety company Protect Young Eyes. To help parents distinguish between the good and the bad, there is a need for a uniform, independent, and accountable rating system for apps, similar to the system used for video games.

It’s impossible to make the internet a completely safe place for children, so the focus should be on moving from “protection to empowerment, blocking to monitoring, and restrictions to responsibility,” said Stephen Balkam, CEO of the Family Online Safety Institute.

Balkam highlighted the importance of engaging “all parts of the technology ecosystem,” with the government providing oversight and parents and teachers receiving technological training so that they can confidently navigate the online world alongside children.

“Most importantly,” Balkam said, “children must be taught to be good digital citizens.” This includes knowing the consequences of sharing personal information and having the moral and technical tools to deal with the inappropriate material they will “inevitably” encounter—both online and offline.

(Photo of Sen. Blumenthal by Emily McPhie.)
