FCC Chairwoman an ‘Optimist’ about AI Despite Ongoing Challenges

The FCC is working rapidly to catch up with AI’s fast evolution.

Jericho Casper

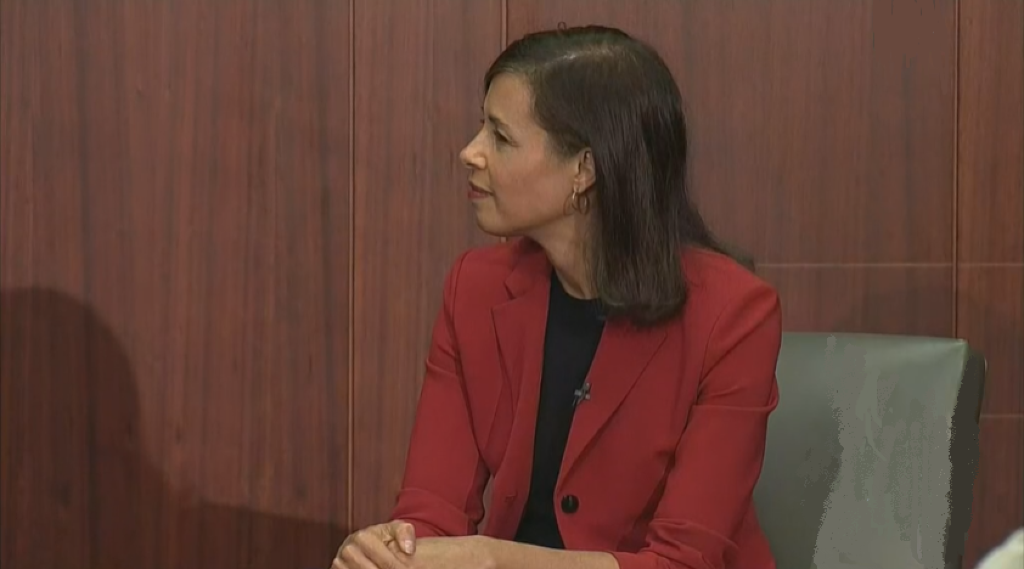

WASHINGTON, Sept. 27, 2024 – Federal Communications Commission Chairwoman Jessica Rosenworcel said Friday that, despite the challenges, she remains an 'optimist' about the potential of artificial intelligence and that her agency is actively planning for its future.

The FCC has been responding swiftly to AI’s growing impact, most recently issuing a legal order Thursday enforcing a $6 million penalty against political consultant Steve Kramer for his role in an AI-generated robocall scam that impersonated President Biden.

“The FCC kicked into high gear. We acted fast,” Rosenworcel explained in her remarks at the 7th Annual Berkeley Law AI Institute on Friday. As the FCC plays catch-up with AI-driven misinformation, Rosenworcel stressed the need for transparency and preparation for the technology’s future.

In response to Kramer’s robocalls, the FCC immediately and unanimously adopted a ruling making clear that artificial or prerecorded robocalls using AI voice cloning technology violate the Telephone Consumer Protection Act.

Rosenworcel detailed the FCC’s efforts: “The carrier ultimately paid $1,000,000 and put in place policies to stop these calls going forward. Our traceback efforts also led to the individual behind the call itself—Steve Kramer. We proposed a $6,000,000 fine. He has not responded. So yesterday at the FCC we adopted a Forfeiture Order to enforce it in court.”

“With these actions, we made clear that if you flood our phones with this junk, we will find you and you will pay,” Rosenworcel emphasized.

The FCC has also been active in addressing AI's impact more broadly, proposing new transparency standards that would require disclosure when AI technology is used in political ads on radio and television. However, the proposal was notably absent from the agency’s tentative October meeting agenda, despite pressure from senators and public concern.

Rosenworcel highlighted a recent Pew survey revealing widespread anxiety about AI’s role in elections. “The American people, by a margin of 82 to 7, are concerned that AI-generated content will spread misinformation this campaign season, and it cuts equally across political lines,” she noted. The same survey found that the public is eight times more likely to believe AI is being used for bad in campaigns than for good.

“At the same time, we are asking what we can do to support this technology and what we can do to manage its risks,” Rosenworcel said. “We want to know what it means for the future of work. We are concerned about models that inherit prejudices. We want to understand what it means for the future of humanity.”
