Social Media Platforms Should Increase Algorithm Transparency, Say Broadband Breakfast Live Online Panelists

July 16, 2020 — Social media companies need to “open up” their algorithms, said participants in a Broadband Breakfast panel on Wednesday.

The event, titled “Public Input on Platform Algorithms: The Role of Transparency and Feedback in High-Tech,” saw participants discuss the role of transparency in the algorithms used by social media companies.

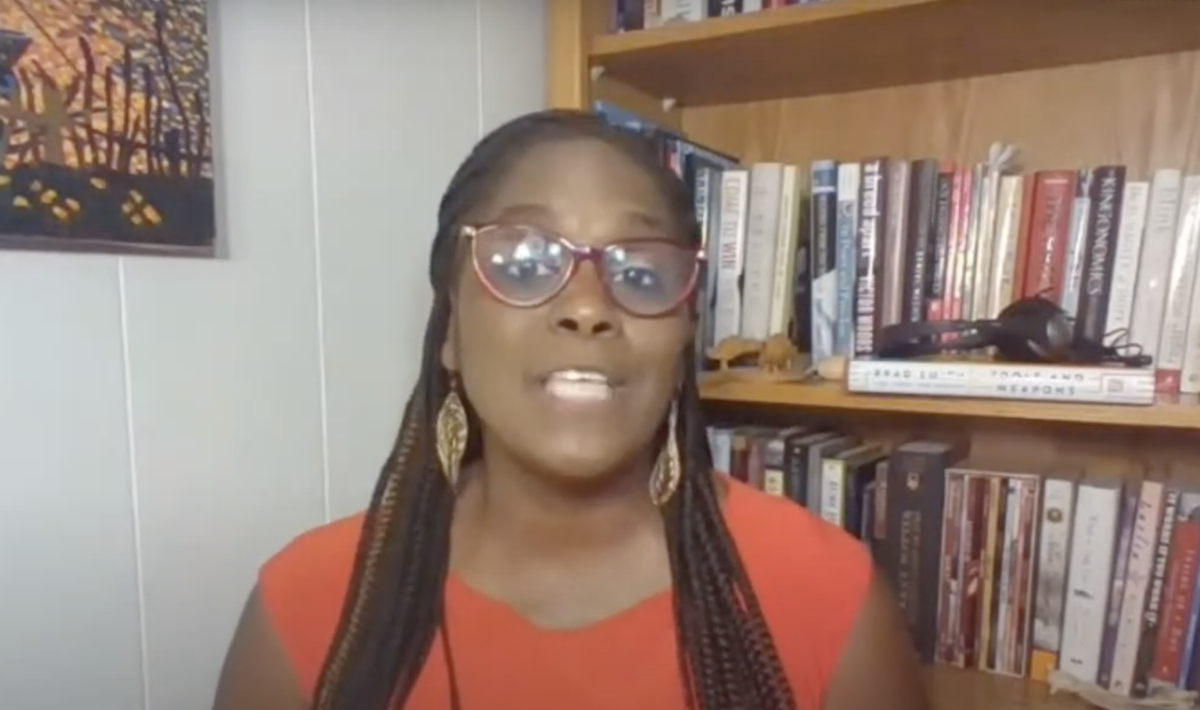

Nicol Turner-Lee, director of the Center for Technology Innovation at the Brookings Institution, said that tech companies must be more transparent about their practices so that users can understand why platforms present them with the content they see.

Turner-Lee said that transparency is important for reducing discrimination in every use of algorithms, including those used to award high school diplomas.

“They use an algorithm in lieu of an in-person test to make the determination as to a student’s ability to qualify for the diploma,” she said. “There was a huge decline among students, and potentially discriminatory outputs.”

Screenshot of Broadband Breakfast Live Online panelists

Harold Feld, senior vice president of Public Knowledge, agreed that algorithmic discrimination, including discrimination along racial lines, is not rare.

“There’s been a fair amount of documentation that shows that there is a bias,” he said. “Posts by African Americans are much more likely to be considered violent or dangerous than those by white users.”

However, Feld said that repealing Section 230 of the Communications Decency Act would not solve this problem.

“You could take away 230 and nothing that we’ve said about the harms would make a damn bit of difference,” he said.

Nathalie Maréchal, a senior policy analyst at Ranking Digital Rights, offered several recommendations for improving transparency on social media.

“What we’re looking for in this area is first for companies to be clear about their rules for ad content … and second, we’re looking for companies to shape their users’ online experiences with the objective of those algorithms or what data is used,” she said.

Section 230 has become a flash point in the ongoing debate over the role and rules of online content moderation. When Twitter flagged several of President Donald Trump’s tweets as misleading or as glorifying violence, Trump hit back by signing an executive order that sought to strip Section 230 of its power.

It is unclear what power, if any, the executive order will have.