New York Signs Social Media Algorithm Block for Minors

The bill requires social media platforms to stop serving algorithmic feeds to minors’ accounts.

Taormina Falsitta

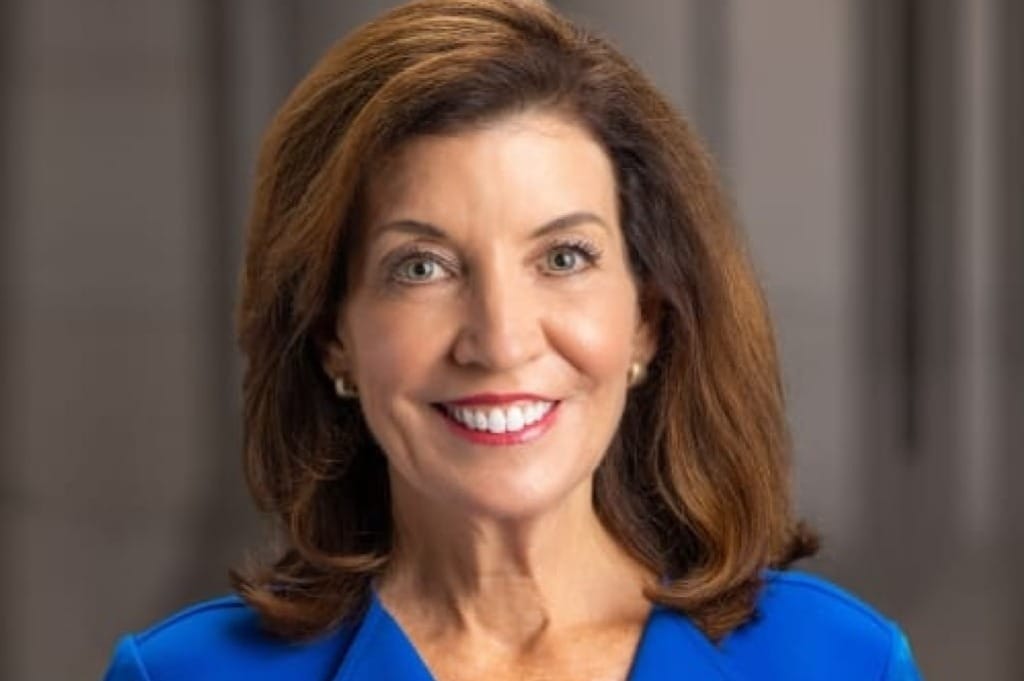

June 25, 2024 – On Thursday, New York Gov. Kathy Hochul signed a bill empowering parents to block algorithm-suggested social media content for their children, addressing concerns about addiction, exploitation, and the harvesting of data.

Hochul said New York is working to protect its children and that the law lets parents “tell the companies that you are not allowed to do this, you don’t have a right to do this, that parents should have say over their children’s lives and their health, not you.”

The law, called the Stop Addictive Feeds Exploitation for Kids Act, requires social media platforms to show minors only content posted by accounts they already follow. In other words, it restricts platforms from using targeted algorithms, which suggest content based on a user’s previous activity, to serve content to minors. It also requires social media companies to restrict notifications to minors between midnight and 6 a.m.

These restrictions can be lifted if the platform obtains verifiable parental consent.

Tech companies opposing the law, including Apple, Google, and Meta, claim that it infringes on the First Amendment because it could limit people’s ability to express themselves. Earlier age verification laws governing minors’ online activity in California, Arkansas, Ohio, and Utah faced similar court challenges alleging violations of First Amendment rights.

Once the law takes effect, it will have “created a way for the government to track what sites people visit and their online activity by forcing websites to censor all content unless visitors provide an ID to verify their age,” trade association NetChoice claimed.

Additionally, the Computer and Communications Industry Association claims that targeted algorithms help protect teens from harmful content and tailor content for younger users.

“While the intent is to reduce the potential for excessive scrolling, eliminating algorithms could lead to a random assortment of content being delivered to users, potentially exposing them to inappropriate material,” CCIA told lawmakers.

On Friday, U.S. District Judge P. Casey Pitts denied Google’s motion to dismiss a lawsuit filed by parents alleging that the tech giant’s tracking of children’s online activity for targeted advertising is both harmful and unlawful.

The suit, brought on behalf of six children under the age of 13, alleges that Google violated their privacy rights by collecting personal information through various apps without first obtaining parental consent. The plaintiffs claim that Google violated the Children’s Online Privacy Protection Act of 1998, among other laws.

Google claims it had no knowledge of the data collection. The plaintiffs, however, allege that Google reviewed every app submitted to its “Designed for Families” program, which they say shows it knew of the issue.

The plaintiffs are seeking injunctive relief that would require Google to permanently delete or sequester all personal information it collected from children without parental consent.

Children’s online safety and data privacy are becoming a larger focus of U.S. legislation. On June 18, California Gov. Gavin Newsom said he is working on a bill to limit students’ phone use in schools in order to help them focus on their education.

California’s Age Appropriate Design Code Act, passed in August 2022, requires online platforms to proactively prioritize the privacy of underage users by default and by design. Another approach focuses on age verification, as in Utah legislation that will require social media companies to verify the age of Utah residents before allowing them to create or keep accounts. Gov. Spencer Cox signed the bill in March of this year.

The federal Kids Online Safety Act, which advanced out of the Senate Commerce Committee in July 2023, would create a “duty of care” requirement for platforms to shield children from harmful content. KOSA would require social media sites to put safeguards in place that protect users under the age of 17 from content that promotes harmful behaviors, such as suicide and eating disorders.
