Senators Confront Benefits of AI in Finance and Insurance Markets

Witnesses expressed support for Trump’s AI Action Plan

Jennifer Michel

WASHINGTON, July 30, 2025 – Senators on the Subcommittee on Securities, Insurance, and Investment of the Committee on Banking, Housing, and Urban Affairs held a hearing Wednesday on the accelerating use of artificial intelligence in financial services and insurance markets.

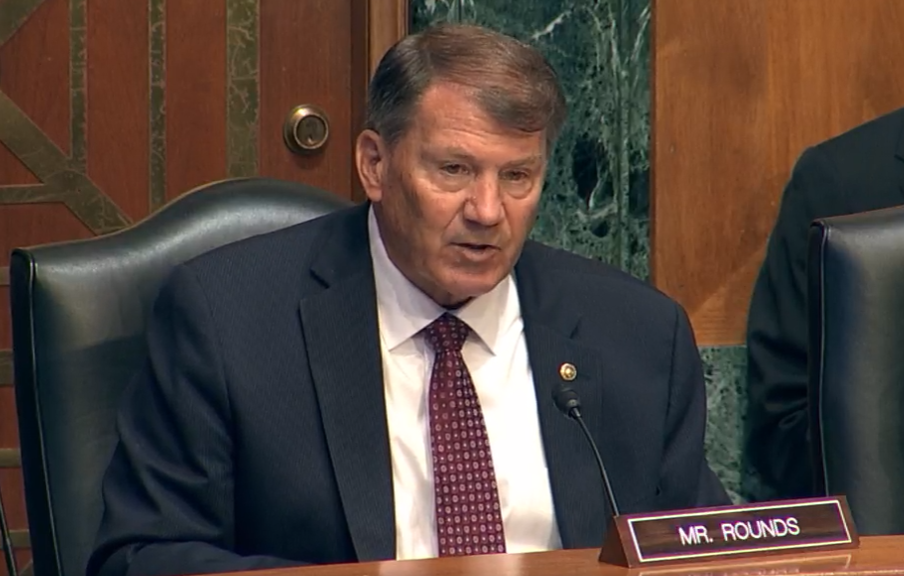

The hearing, titled “Guardrails and Growth: AI’s Role in Capital and Insurance Markets,” began with Chairman Mike Rounds, R-South Dakota, reintroducing the bipartisan Unleashing AI Innovation in Financial Services Act.

First introduced by Rounds in 2024, the act calls for each financial regulatory agency to “establish a regulatory sandbox that allows regulated entities to experiment with AI test projects without unnecessary or unduly burdensome regulation or expectation of retroactive enforcement actions.”

Rounds said at the hearing that the use of AI in the financial sector has proven fruitful, citing findings that AI-driven security enhancements have boosted fraud detection rates by up to 300% and prevented over $50 billion in fraud in the past three years.

“This is exactly the kind of progress we should be encouraging,” said Rounds. “That's why today I'm reintroducing the Unleashing AI Innovation in Financial Services Act… [a] bipartisan bill that would create a venue for financial institutions and regulators to work together to test AI projects for deployment. By creating a safe space for experimentation, we can help firms innovate and regulators learn without applying outdated rules that don't fit today's technology.”

In the testimony that followed, the witnesses all noted the positive transformation AI has brought and can bring to the financial sector, but warned about its emerging risks.

According to David Cox, vice president for artificial intelligence models at IBM Research, generative AI and large language models (LLMs) can be used for regulatory compliance and audit trail generation; summarization of lengthy prospectuses and client contracts; faster, more personalized client service through natural language interfaces; automated reporting, complaint resolution, and workflow optimization; and enhanced developer productivity and IT resilience.

However, Cox warned that without transparency, an open AI ecosystem, and a risk-based approach to securing data, the social and economic benefits of this technology will not be widespread. Current problems include a lack of clarity about LLM training data, challenges in tracking AI decision-making processes, and the potential for bias in AI systems and hallucinations in generative models.

Echoing the priorities established in President Donald Trump’s recent AI Action Plan, Cox said that “a healthy, competitive AI market ecosystem requires open source AI to ensure America’s AI future is controlled by the many, not only a select few.”

Sharing this perspective, witness Kevin Kalinich, global collaboration leader of intangible assets at Aon, said that the AI Action Plan “highlights the benefits of comprehensive strategy to bolster the US leadership in artificial intelligence. The plan recognizes the urgency to accelerate innovation, incentives to build AI infrastructure and lead in international diplomacy and security, all while acknowledging the need to address potential risks and promote trustworthy AI.”

Cox and Kalinich also both emphasized the importance of a national AI policy, a key component of Trump’s AI agenda.

“If there isn't alignment and harmony on the regulatory front, then it reduces complexity and won't bring the balance between innovation and safety,” said Cox. “We wouldn't want to see [regulation] at a state by state level, because, again, that would probably impede innovation and keep some players out from actually experimenting, and so the greater clarity we can have, the greater certainty that we can have, in general on markets, you're going to see more participation and more embracing of transparency.”

The hearing made clear that AI can improve capital markets analysis, enhance fraud detection, revolutionize insurance underwriting, and increase operational efficiency. However, regulatory frameworks must protect consumers without stifling innovation, especially as AI’s potential to disrupt the job market and create cybersecurity threats grows.

To promote an environment that appropriately balances guardrails and innovation, Kalinich stressed the importance of public-private partnerships.

“We welcome public policy that will ensure that liability frameworks are clear, that insurance markets remain viable, and that AI developers and end users alike can act with confidence,” Kalinich said. “Let's work together to ensure AI becomes not just a technological breakthrough, but a force for good, guided by insight, trust and shared responsibility.”
