Australian Group Chronicles the Growing Realism of ‘Deep Fakes,’ and Their Geopolitical Risk

May 5, 2020 – A new report from the Australian Strategic Policy Institute and the International Cyber Policy Centre detailed the state of rapidly developing “deep fake” technology and its potential to produce propaganda and misleading imagery more easily than ever.

The report, by Australian National University’s Senior Advisor for Public Policy Katherine Mansted and Researcher Hannah Smith, explained the costs of artificial intelligence technology that allows users to falsify or misrepresent existing media, as well as to generate entirely new media.

While audio-visual “cheap fakes” (media edited using tools other than AI) are not a recent phenomenon, the rapid rise of artificial-intelligence-powered technology has given nefarious actors several means of producing misleading material at a staggering pace, four of which were highlighted by the ASPI report.

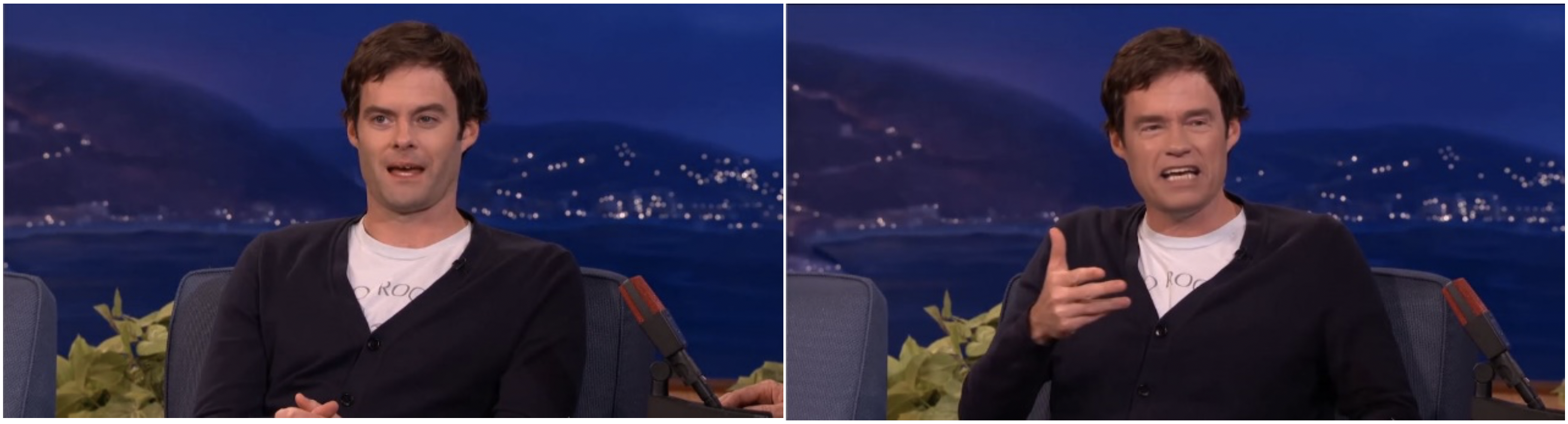

First, the face swapping method maps the face of one person and superimposes it onto the head of another.

The re-enactment method allows a deep fake creator to use facial tracking to manipulate the facial movements of their desired target. Another method, known as lip-syncing, combines re-enactment with phony audio generation to make it appear as though speakers are saying things they never did.

Finally, motion transfer technology allows the body movements of one person to control those of another.

An example of face swapping. Source: “Bill Hader impersonates Arnold Schwarzenegger [DeepFake]” Video

This technology creates disastrous possibilities, the report said. When using various deep fake methods in conjunction, one can make it appear as though critical political figures are performing offensive or criminal acts or announcing forthcoming military action in hostile countries.

If deployed in a high-pressure situation where the prompt authentication of such media is not possible, real-life retaliation could occur.

The technology has already caused harm outside of the political arena.

The vast majority of deep fake technology is used on internet forums like Reddit to superimpose the faces of non-consenting people, such as celebrities, onto the bodies of men and women in pornographic videos, the report said.

Visual deep fakes are not perfect, and those available to the layman are often recognizable. But the technology has developed rapidly since 2017, and programs that work to make the deep fakes undetectable have as well.

Generative adversarial networks pit two AI networks against each other, one generating deep fakes and the other trying to detect them, checking and refining hundreds or thousands of times until the fake audio and visual media are unrecognizable to the detecting network, let alone to the human eye. “GAN models are now widely accessible,” the report said, “and many are available for free online.”

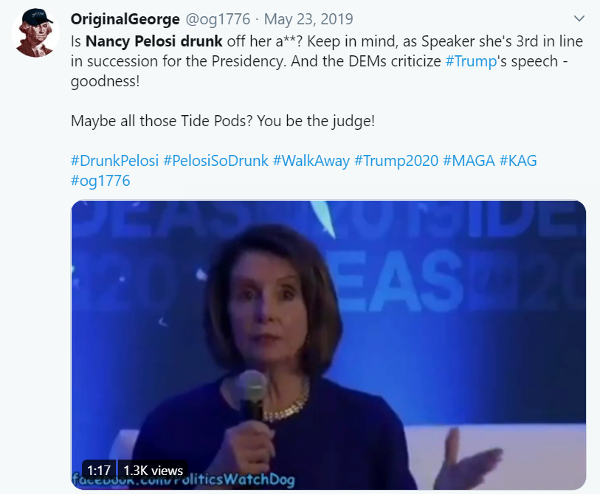

A video, tweeted from an anonymous account, that appears to show House Speaker Nancy Pelosi inebriated but was merely slowed and pitch-corrected.

Such forged videos are already widespread and may already have had an impact on public trust in elected officials and others, although such a phenomenon is difficult to quantify.

The report also detailed multiple instances in which a purposely altered video circulated online and potentially misinformed viewers, including a cheap fake video that was slowed and pitch-corrected to make House Speaker Nancy Pelosi appear inebriated.

Another video mentioned in the report, generated by AI think tank Future Advocacy during the 2019 UK general election, used voice generation and lip-sync to make it appear as though now-Prime Minister Boris Johnson and then-opponent Jeremy Corbyn were endorsing each other for the office.

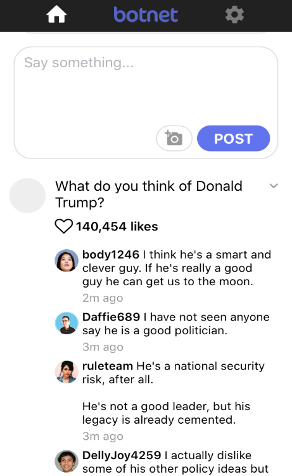

Such videos can have a devastating effect on public trust, wrote Mansted and Smith. Producing them is more accessible than ever, and deep fake creators can also use bots to swarm public internet forums and comment sections with commentary that, because it lacks a visual element, can be almost impossible to recognize as artificial.

Apps like Botnet exemplify the problem of deep fake bots. Users make an account, post to it, and are quickly flooded with artificial comments. This technology is frequently used on online forums, and its output can be impossible to distinguish from legitimate comments.

The accelerated production of such materials can make it feel as though the future of media is one where almost no video can be trusted to be authentic, and the report admitted that “On balance, detectors are losing the ‘arms race’ with creators of sophisticated deep fakes.”

However, Mansted and Smith concluded with several suggestions for combating the rise of ill-intentioned deep fakes.

Firstly, the report proposed that international governments and online forums should “fund research into the further development and deployment of detection technologies” as well as “require digital platforms to deploy detection tools, especially to identify and label content generated through deep fake processes.”

Secondly, the report suggested that media and individuals should stop accepting audio-visual media at face value, adding that “Public awareness campaigns… will be needed to encourage users to critically engage with online content.”

Such a change of perception will be difficult, however, as the spread of this imagery is largely based on emotion and not critical thinking.

Lastly, the report suggested the implementation of authentication standards such as encryption and blockchain technology.

“An alternative to detecting all false content is to signal the authenticity of all legitimate content,” Mansted and Smith wrote. “Over time, it’s likely that certification systems for digital content will become more sophisticated, in part mitigating the risk of weaponised deep fakes.”
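The idea of signalling authenticity, rather than detecting every fake, can be illustrated with a minimal Python sketch. It is not the scheme the report proposes: it assumes a publisher and a verifier share a secret key and uses an HMAC tag from the standard library, where a real certification system would more likely rely on public-key signatures. The names `sign_media`, `verify_media`, and the sample key and bytes are hypothetical.

```python
import hashlib
import hmac


def sign_media(media_bytes: bytes, publisher_key: bytes) -> str:
    """Return a hex authenticity tag for the media (HMAC-SHA256).

    Assumption: publisher_key is a secret shared with the verifier.
    """
    return hmac.new(publisher_key, media_bytes, hashlib.sha256).hexdigest()


def verify_media(media_bytes: bytes, tag: str, publisher_key: bytes) -> bool:
    """Check that the media still matches the tag issued by the publisher."""
    expected = sign_media(media_bytes, publisher_key)
    # compare_digest avoids leaking information through timing differences
    return hmac.compare_digest(expected, tag)


# Hypothetical key and content, for illustration only
key = b"example-publisher-key"
original = b"raw bytes of the original video"
tag = sign_media(original, key)

print(verify_media(original, tag, key))              # True
print(verify_media(original + b" edited", tag, key)) # False
```

Any change to the media, such as slowing a clip or swapping a face, alters its bytes and causes verification to fail, so unaltered content can be marked as legitimate without having to detect each individual fake.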