Automated Content Moderation’s Main Problem is Subjectivity, Not Accuracy, Expert Says

With millions of pieces of content generated daily, platforms are increasingly relying on AI for moderation.

Em McPhie

WASHINGTON, February 2, 2023 — The vast quantity of online content generated daily will likely drive platforms to increasingly rely on artificial intelligence for content moderation, making it critically important to understand the technology’s limitations, according to an industry expert.

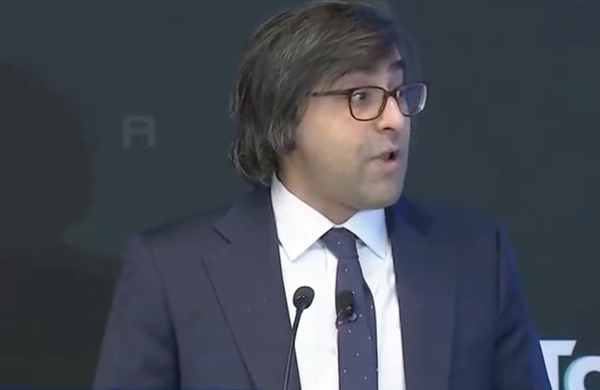

Despite the ongoing culture war over content moderation, the practice is largely driven by financial incentives — so even companies with “a speech-maximizing set of values” will likely find some amount of moderation unavoidable, said Alex Feerst, CEO of Murmuration Labs, at a Jan. 25 American Enterprise Institute event. Murmuration Labs works with tech companies to develop online trust and safety products, policies and operations.

If a piece of online content could potentially lead to hundreds of thousands of dollars in legal fees, a company is “highly incentivized to err on the side of taking things down,” Feerst said. And even beyond legal liability, if the presence of certain content will alienate a substantial number of users and advertisers, companies have financial motivation to remove it.

However, a major challenge for content moderation is the sheer quantity of user-generated online content — which, on the average day, includes 500 million new tweets, 700 million Facebook comments and 720,000 hours of video uploaded to YouTube.

“The fully loaded cost of running a platform includes making millions of speech adjudications per day,” Feerst said.

“If you think about the enormity of that cost, very quickly you get to the point of, ‘Even if we’re doing very skillful outsourcing with great accuracy, we’re going to need automation to make the number of daily adjudications that we seem to need in order to process all of the speech that everybody is putting online and all of the disputes that are arising.’”

Automated moderation is not just a theoretical future question. In a March 2021 congressional hearing, Meta CEO Mark Zuckerberg testified that “more than 95 percent of the hate speech that we take down is done by an AI and not by a person… And I think it’s 98 or 99 percent of the terrorist content.”

Dealing with subjective content

But although AI can help manage the volume of user-generated content, it can’t solve one of the key problems of moderation: Beyond a limited amount of clearly illegal material, most decisions are subjective.

Much of the debate surrounding automated content moderation mistakenly presents subjectivity problems as accuracy problems, Feerst said.

For example, much of what is generally considered “hate speech” is not technically illegal, but many platforms’ terms of service prohibit such content. With these extrajudicial rules, there is often room for broad disagreement over whether any particular piece of content is a violation.

“AI cannot solve that human subjective disagreement problem,” Feerst said. “All it can do is more efficiently multiply this problem.”

This multiplication becomes problematic when AI models are replicating and amplifying human biases, which was the basis for the Federal Trade Commission’s June 2022 report warning Congress to avoid overreliance on AI.

“Nobody should treat AI as the solution to the spread of harmful online content,” said Samuel Levine, director of the FTC’s Bureau of Consumer Protection, in a statement announcing the report. “Combatting online harm requires a broad societal effort, not an overly optimistic belief that new technology — which can be both helpful and dangerous — will take these problems off our hands.”

The FTC’s report pointed to multiple studies revealing bias in automated hate speech detection models, often as a result of being trained on unrepresentative and discriminatory data sets.

As moderation processes become increasingly automated, Feerst predicted that the “trend of those problems being amplified and becoming less possible to discern seems very likely.”

Given those dangers, Feerst emphasized the urgency of understanding and then working to resolve AI’s limitations, noting that the demand for content moderation will not go away. To some extent, speech disputes are “just humans being human… you’re never going to get it down to zero,” he said.

Member discussion