Congress Should Mandate AI Guidelines for Transparency and Labeling, Say Witnesses

Transparency around data collection and risk assessments should be mandated by law, especially in high-risk applications of AI.

Jake Neenan

WASHINGTON, September 12, 2023 – The United States should enact legislation mandating transparency from companies making and using artificial intelligence models, experts told the Senate Commerce Subcommittee on Consumer Protection, Product Safety, and Data Security on Tuesday.

It was one of two AI policy hearings on the Hill Tuesday, alongside a Senate Judiciary Committee hearing, and coincided with a meeting of the executive branch's National AI Advisory Committee.

The Senate Commerce subcommittee asked witnesses how AI-specific regulations should be implemented and what lawmakers should keep in mind when drafting potential legislation.

“The unwillingness of leading vendors to disclose the attributes and provenance of the data they’ve used to train models needs to be urgently addressed,” said Ramayya Krishnan, dean of Carnegie Mellon University’s college of information systems and public policy.

Addressing problems with transparency of AI systems

Addressing the lack of transparency might look like standardized documentation outlining data sources and bias assessments, Krishnan said. That documentation could be verified by auditors and function “like a nutrition label” for users.

Witnesses from both private industry and human rights advocacy agreed that legally binding guidelines – both for transparency and risk management – will be necessary.

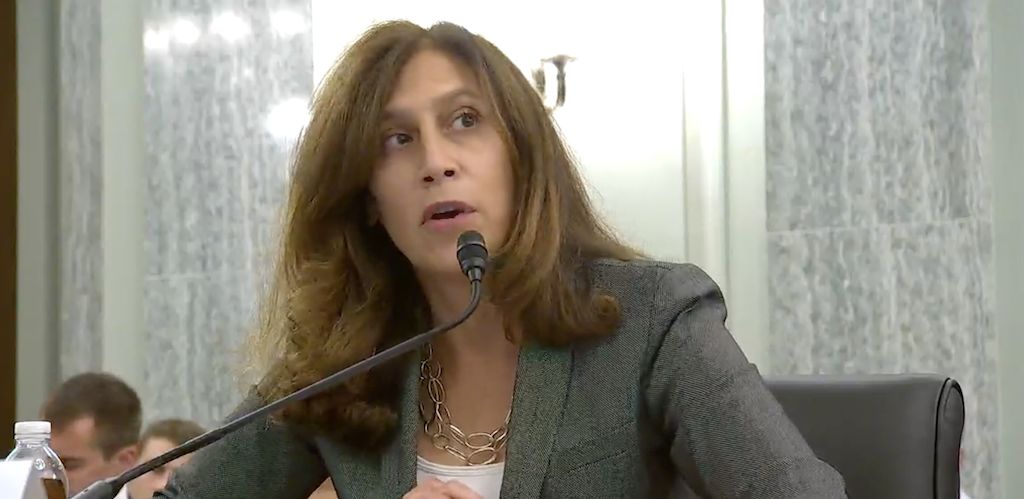

Victoria Espinel, CEO of the Business Software Alliance, a trade group representing software companies, said the AI risk management framework developed in March by the National Institute of Standards and Technology was important, “but we do not think it is sufficient.”

“We think it would be best if legislation required companies in high-risk situations to be doing impact assessments and have internal risk management programs,” she said.

Those mandates – along with other transparency requirements discussed by the panel – should look different for companies that develop AI models and those that use them, and should only apply in the most high-risk applications, panelists said.

That last suggestion is in line with legislation being discussed in the European Union, which would apply differently depending on the assessed risk of a model’s use.

“High-risk” uses of AI, according to the witnesses, are situations in which an AI model is making consequential decisions, like in healthcare, hiring processes, and driving. Less consequential machine-learning models like those powering voice assistants and autocorrect would be subject to less government scrutiny under this framework.

Labeling AI-generated content

The panel also discussed the need to label AI-generated content.

“It is unreasonable to expect consumers to spot deceptive yet realistic imagery and voices,” said Sam Gregory, director of human rights advocacy group WITNESS. “Guidance to look for a six fingered hand or spot virtual errors in a puffer jacket do not help in the long run.”

With elections in the U.S. approaching, panelists agreed that mandating labels on AI-generated images and videos will be essential. They said those labels will have to be more robust than visual watermarks, which can be easily removed, and might take the form of cryptographically bound metadata.
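For readers unfamiliar with the idea, the sketch below shows one way metadata can be bound to content so that altering either one is detectable. It is an illustration only, not any protocol the witnesses described: the function names, the label fields, and the shared `SIGNING_KEY` are all hypothetical, and real provenance schemes would more likely use public-key signatures than the keyed hash assumed here.

```python
import hashlib
import hmac
import json

# Hypothetical key held by the labeling tool (an assumption for this sketch).
SIGNING_KEY = b"demo-key-not-for-production"


def make_label(content: bytes, generator: str) -> dict:
    """Build a label whose tag covers the metadata, including the content hash."""
    metadata = {
        "ai_generated": True,
        "generator": generator,  # names the tool, not a person
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    # Serialize deterministically so the same metadata always yields the same tag.
    payload = json.dumps(metadata, sort_keys=True).encode("utf-8")
    tag = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"metadata": metadata, "tag": tag}


def verify_label(content: bytes, label: dict) -> bool:
    """Return True only if neither the metadata nor the content was altered."""
    metadata = label["metadata"]
    payload = json.dumps(metadata, sort_keys=True).encode("utf-8")
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, label["tag"]):
        return False  # metadata was edited after signing
    return metadata["content_sha256"] == hashlib.sha256(content).hexdigest()
```

Unlike a visible watermark, a label of this kind cannot be quietly edited: changing the image bytes or flipping the `ai_generated` field causes verification to fail. It can still be stripped entirely, so a missing label proves nothing by itself.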

Labeling content as being AI-generated will also be important for developers, Krishnan noted, as generative AI models become much less effective when trained on writing or images made by other AIs.

Privacy around these content labels was a concern for panelists. Some protocols for verifying the origins of a piece of content with metadata require the personal information of human creators.

“This is absolutely critical,” said Gregory. “We have to start from the principle that these approaches do not oblige personal information or identity to be a part of them.”

Separately, the executive branch committee that met Tuesday, which was established under the National AI Initiative Act of 2020, is tasked with advising the president on AI-related matters. The NAIAC gathers representatives from the Departments of State, Defense, Energy and Commerce, together with the Attorney General, Director of National Intelligence, and Director of Science and Technology Policy.