Workforce Training Needed to Address Artificial Intelligence Bias, Researchers Suggest

Building on the Blueprint for an AI Bill of Rights by the White House Office of Science and Technology Policy.

Zoey Howell-Brown

WASHINGTON, October 24, 2022 – To align with the newly released White House guide on artificial intelligence, more social and technical workforce training is needed to address artificial intelligence biases, the policy director of Stanford University’s Institute for Human-Centered Artificial Intelligence said at a Brookings Institution event last week.

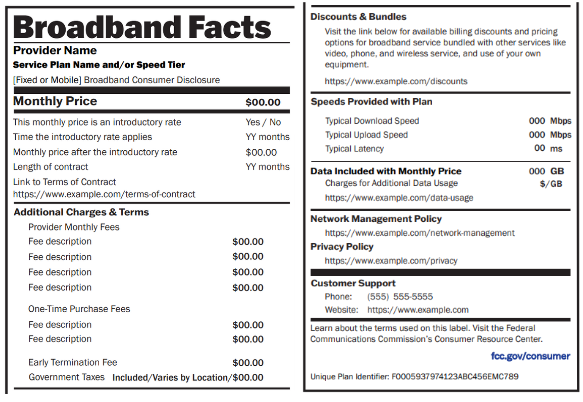

Released on October 4, the Blueprint for an AI Bill of Rights from the White House’s Office of Science and Technology Policy is a framework of five principles for companies to follow to protect consumer rights from automated harm.

AI algorithms rely on learning users’ behavior and disclosed information to customize services and advertising. Because of how this process works, algorithms can send targeted information or enforce discriminatory eligibility practices based on race or class status, according to critics.

Risk mitigation, which prevents algorithm-based discrimination in AI technology, is listed as an “expectation of an automated system” under the “safe and effective systems” section of the White House framework.

Experts at the virtual Brookings event said that workforce development is the starting point for professionals to learn how to identify risk and build the capacity to meet this expectation.

“We don’t have the talent available to do this type of investigative work,” Russell Wald, policy director for Stanford’s Institute for Human-Centered Artificial Intelligence, said at the event.

“We just don’t have a trained workforce ready, and so what we really need to do is, I think, we should invest in the next generation now and start giving people tools and access and the ability to learn how to do this type of work.”

Nicol Turner-Lee, senior fellow at the Brookings Institution, agreed with Wald, recommending that sociologists, philosophers and technologists get involved in AI programming to align with algorithmic discrimination protections – another core principle of the framework.

Core principles and protections suggested in this framework would require lawmakers to create new policies or include them in current safety requirements or civil rights laws. Each principle includes three sections on principles, automated systems and practice by government entities.

In July, Adam Thierer, senior research fellow at the Mercatus Center at George Mason University, stated that he is “a little skeptical that we should create a regulatory AI structure,” and instead proposed educating workers on how to set best practices for risk management, calling it an “educational institution approach.”
