Content Moderation Experts Discuss Future of Section 230 in Broadband Breakfast Live Online Event

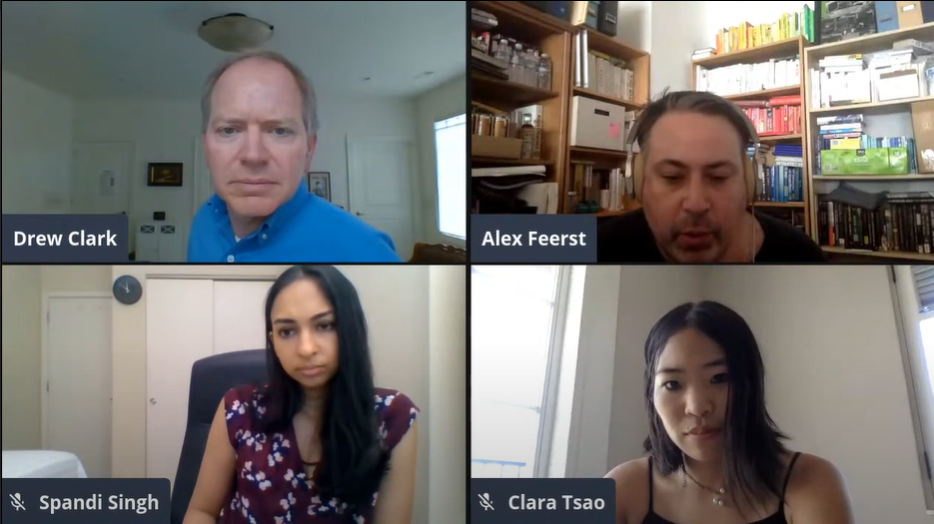

Jericho Casper

July 6, 2020 — The role of a content moderator is to be both a judge and a janitor of the internet, according to Alex Feerst, general counsel at Neuralink.

In a Broadband Breakfast Live Online event on Wednesday, a panel of content moderation experts joined moderator Drew Clark, editor and publisher of Broadband Breakfast, to discuss the future of content moderation in the wake of heightened controversy surrounding Section 230 of the Communications Decency Act.

The event was the first in a three-part series on the power, impact and scope of Section 230.

Speaking at the beginning of the panel, Broadband Breakfast Assistant Editor Emily McPhie said Section 230 is what empowers websites to make content moderation decisions. Reforms to Section 230 would likely make it harder for platforms to moderate content selectively, she said.

The job of a content moderator has been altered and expanded through the years, Clark said.

Today, essentially all platforms moderate more content than anyone would imagine, Feerst said. Section 230 provides a baseline on top of which platforms can build rule sets that actively attempt to foster the communities they want, he added.

Content moderation is a difficult task, as it is nearly impossible to satisfy everyone at once.

Feerst argued that intersubjectivity becomes an issue as platforms attempt to mediate among many different opinions about what should and should not be accepted.

“Because the content moderation process is so difficult and because approaches vary, it is necessary platforms provide transparency,” said Spandi Singh, a policy analyst at New America’s Open Technology Institute. The institute fights for greater transparency and accountability in content moderation practices.

Screenshot of panelists from the Broadband Breakfast Live Online webcast

The panelists also described a disconnect between their day-to-day work and the general public's understanding of it.

“I would talk to people and felt very misunderstood, because people thought, ‘How hard can it be?’” Feerst said.

To address this, Clara Tsao, interim co-executive director of the Trust and Safety Professional Association, said she has worked to build a community that supports content moderation professionals, while also training individuals and centralizing resources.

According to Tsao, four basic types of content moderation jobs exist: at-scale user-generated content moderators and contractors, monetized content moderators, legal operations processors, and content policy developers.

Full-time content reviewers and contractors face the poorest working conditions of the four groups, she said.

Although the work is taxing, Feerst emphasized the importance of humans doing this labor, rather than relying on a fully automated process.

Currently, moderators use artificial intelligence to gather pools of questionable content for human review and final decision-making.

“Areas where there is automated decision making are few,” Feerst said. “I think that’s good — humans are far better at this than AI.”

“Not a lot of data around human versus AI content moderation exists,” Singh said. The evidence that does exist shows that automated tools are not as accurate in moderating content as humans, she added.

“It would be nice to do this at scale, but there really is not a way to do so,” Feerst said.

“The space is only going to get more complicated,” Tsao said, arguing that users should have a voice and be able to advocate for their own beliefs in the matter.

If platforms continue to host so much speech, they must be willing to accept the costs of content moderation, Feerst said.

“It’s going to be expensive if you want to treat employees well,” he said.

Broadband Breakfast’s series on Section 230 will continue next Wednesday with a discussion of the statute in the context of an election year.

Member discussion