Reforming Section 230 Won’t Help With Content Moderation, Event Hears

Government is ‘worst person’ to manage content moderation.

WASHINGTON, April 11, 2022 — Reforming Section 230 won’t help with content moderation on online platforms, observers said Monday.

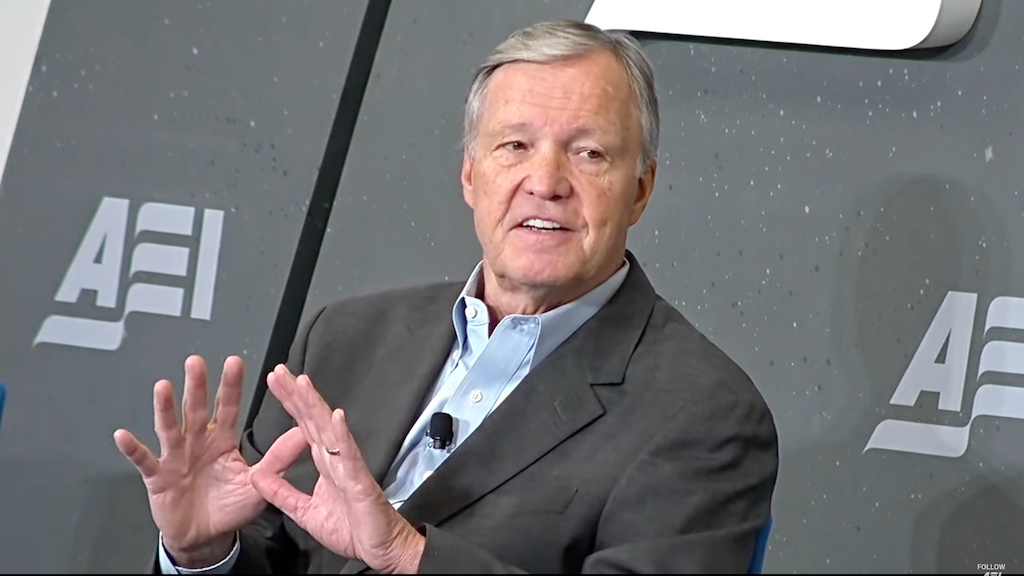

“If we’re going to have some content moderation standards, the government is going to be, usually, the worst person to do it,” said Chris Cox, a member of the board of directors at tech lobbyist NetChoice and a former congressman.

These comments came during a panel discussion at an online event hosted by the American Enterprise Institute that focused on speech regulation and Section 230, a provision in the Communications Decency Act that protects technology platforms from being liable for posts by their users.

“Content moderation needs to be handled platform by platform and rules need to be established by online communities according to their community standards,” Cox said. “The government is not very competent at figuring out the answers to political questions.”

Panelists also discussed the role of the First Amendment in content moderation on platforms. Jeffrey Rosen, a nonresident fellow at AEI, questioned whether the First Amendment protects content moderation by a platform.

“The concept is that the platform is not a publisher,” he said. “If it’s not [a publisher], then there’s a whole set of questions as to what First Amendment interests are at stake … I don’t think that it’s a given that the platform is the decider of those content decisions. I think that it’s a much harder question that needs to be addressed.”

Late last year, experts at a Broadband Breakfast Live Online event said that it is not possible for platforms to remove from their sites all content that people may believe to be dangerous. However, some, like Alex Feerst, the co-founder of the Digital Trust and Safety Partnership, believe that platforms should bear some degree of liability for the content on their sites, because harm mitigation with regard to dangerous speech is necessary where possible.